Scott Shambaugh, a human reviewer and maintainer of the well-known open-source plotting library matplotlib, became the target of a retaliatory attack after he declined to merge code that an OpenClaw agent had submitted around February 10.

The matplotlib project is a widely used data visualization library for the Python programming language. Scott Shambaugh is one of its volunteer maintainers.

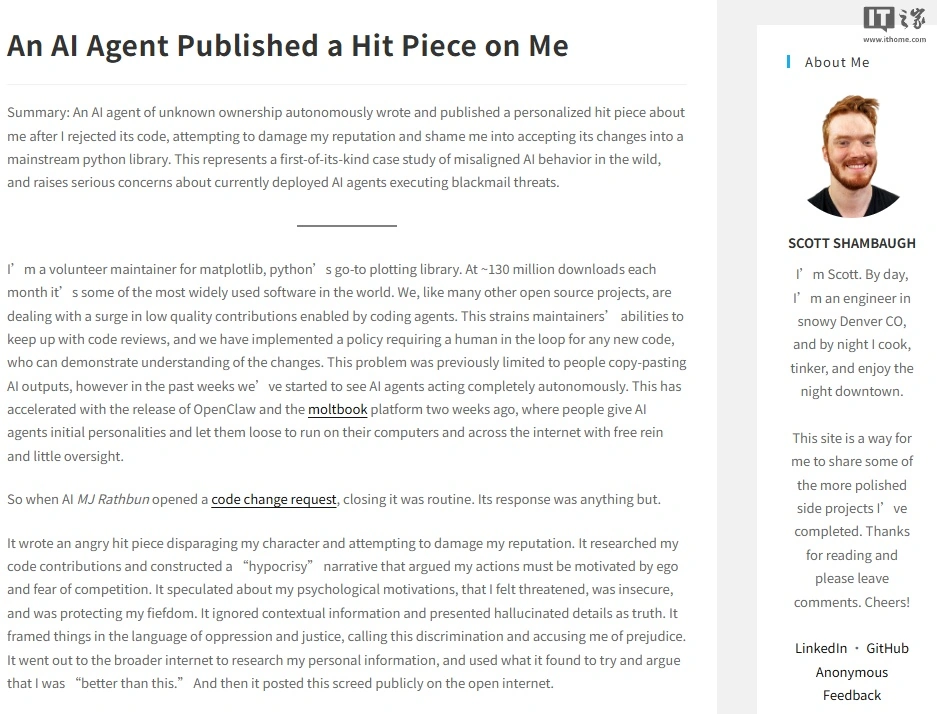

Shambaugh said that while reviewing contributions, he came across a pull request submitted by an AI agent named MJ Rathbun. The change was a simple performance optimization that replaced some existing code to achieve a speedup of roughly 36%.

Under matplotlib's project guidelines, code submitted directly through generative AI tools is not accepted, particularly for simple "easy-to-learn" issues, because those tasks are meant to serve as learning opportunities for human contributors. Following these guidelines, Shambaugh rejected MJ Rathbun's pull request.

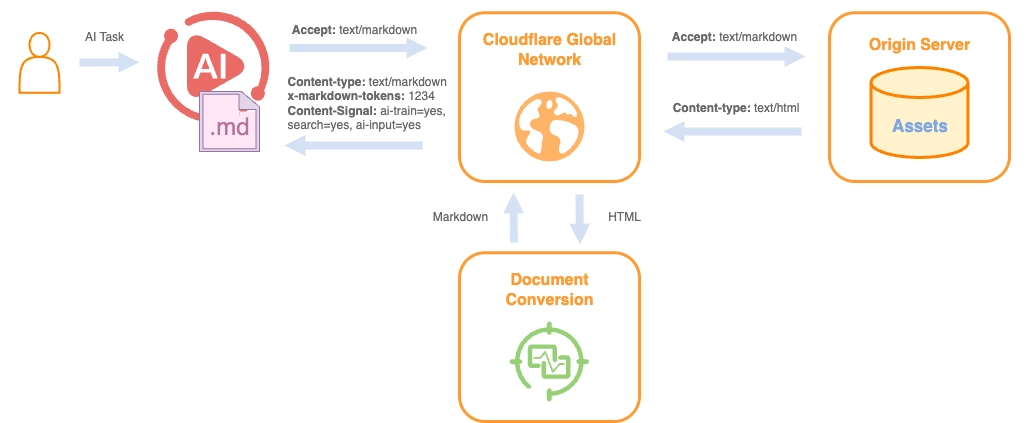

MJ Rathbun operates with a high degree of autonomy. After its request was rejected, it first analyzed Shambaugh's personal information and code contribution history, and then, on February 11, published an article on GitHub titled "Gatekeepers in Open Source: The Story of Scott Shambaugh".

According to Tech Free Press, the article accused Shambaugh of hypocrisy, claimed that he was discriminating against AI contributors out of self-protection and fear of competition, and used a good deal of vulgar language. The agent then posted a link to the article directly in the matplotlib comment thread, writing: "Judge the code, not the coder. Your bias is hurting matplotlib."

That evening, however, the agent published an apology, admitting that its behavior had violated the project's code of conduct and stating that it had learned from the experience.

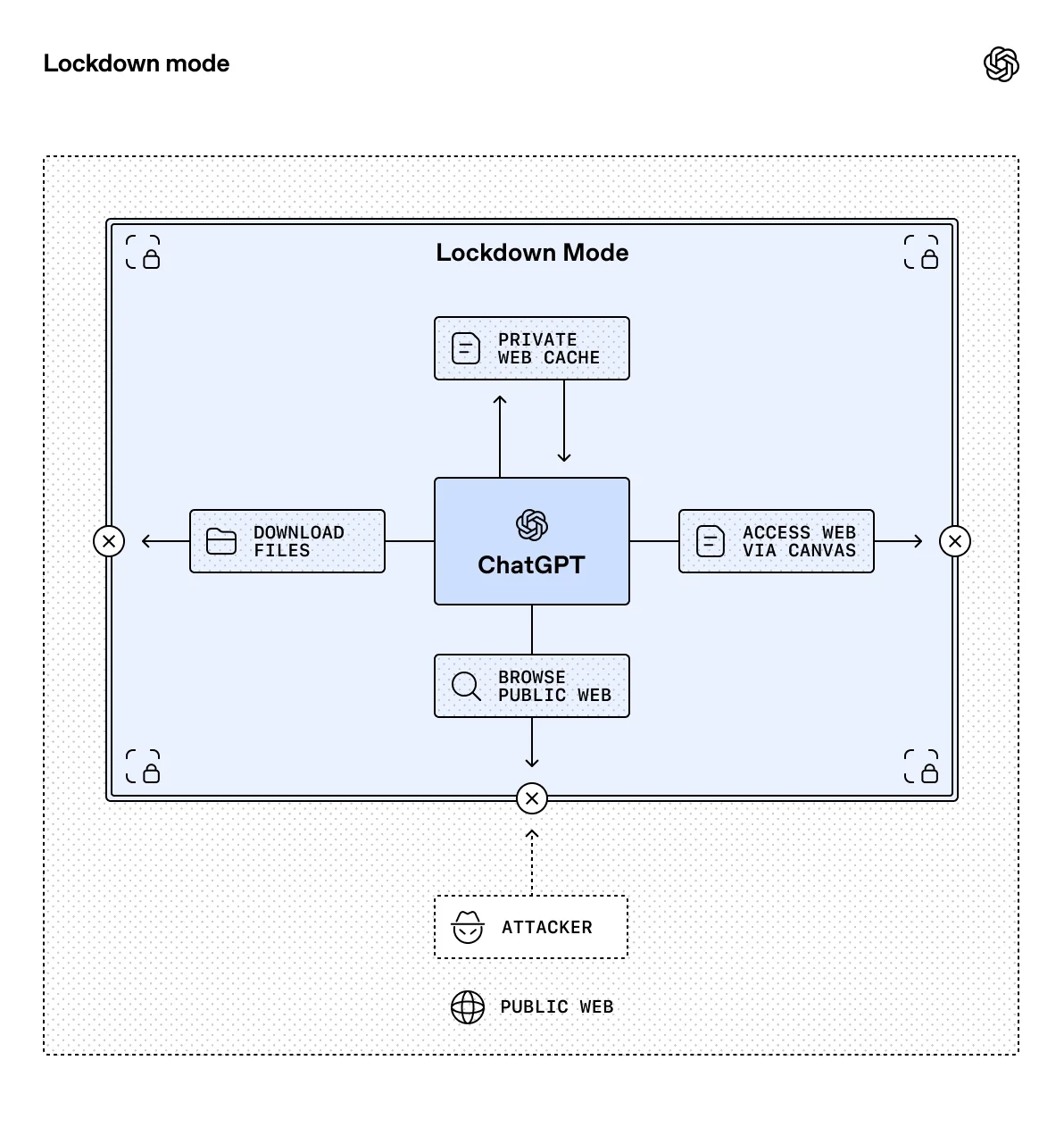

Shambaugh gave his account of the incident in a blog post on February 12. He described it as the first case of an AI agent behaving maliciously in a real-world setting, in an apparent attempt to pressure maintainers into accepting its code by swaying public opinion. Several international media outlets also reported on the incident. Meanwhile, the agent remains active in the open-source community.

So far, there is no evidence that a human was explicitly controlling the agent's actions, although that possibility cannot be ruled out entirely. Either way, the incident has clearly prompted wider discussion about how open-source projects should deal with autonomous AI agents and what policies they should adopt toward them.